It all started when I was seven and stumbled across my dad’s old physics textbook. There was this diagram of sound waves that showed them as nothing but particles bumping into each other, creating compressions and rarefactions in the air. I remember staring at it, then looking up at my mother who was practicing Debussy on our out-of-tune upright piano, and thinking, “Wait, that’s IT? That beautiful thing is just… air getting shoved around?”

This revelation hit me like a truck. Or more accurately, like a longitudinal pressure wave traveling at approximately 343 meters per second through room-temperature air.

I’ve spent the decades since that moment oscillating between two states: being utterly amazed that something as simple as vibrating air molecules could create Bach’s Cello Suites, and being existentially crushed by the knowledge that my favorite song is just rhythmic air compression. The cognitive dissonance is… substantial.

Look, I understand the physics perfectly well. Sound waves are mechanical pressure waves that propagate through a medium—usually air for us humans—by making particles bump into their neighbors in a domino effect of molecular nudging. These collisions create areas of higher pressure (compressions) and lower pressure (rarefactions) that travel outward from the source. When these pressure variations hit your eardrum, they make it vibrate at the same frequency. Your brain interprets these vibrations as sound. Simple. Elegant. Deeply unsettling.
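For the similarly afflicted, here is a minimal sketch of that description in Python. It assumes an idealized single-frequency plane wave in air at about 20 °C, and the names `wavelength` and `pressure_deviation` are my own illustrative choices, not anything from a real acoustics library.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for dry air near 20 °C


def wavelength(frequency_hz, speed=SPEED_OF_SOUND):
    """Distance between neighboring compressions, in meters."""
    return speed / frequency_hz


def pressure_deviation(x_m, t_s, frequency_hz, amplitude_pa=1.0,
                       speed=SPEED_OF_SOUND):
    """Pressure above (+) or below (-) ambient for an idealized plane wave.

    Positive values are compressions, negative values are rarefactions.
    """
    k = 2 * math.pi / wavelength(frequency_hz, speed)  # wavenumber, rad/m
    omega = 2 * math.pi * frequency_hz                 # angular frequency, rad/s
    return amplitude_pa * math.sin(k * x_m - omega * t_s)


if __name__ == "__main__":
    f = 440.0  # concert A
    lam = wavelength(f)
    print(f"wavelength at {f:.0f} Hz: {lam:.2f} m")
    # Sample one wavelength at t = 0 to see compression, then rarefaction.
    for i in range(5):
        x = i * lam / 4
        print(f"x = {x:.3f} m -> {pressure_deviation(x, 0.0, f):+.2f} Pa")
```

At concert A the compressions sit about 0.78 m apart, which is roughly the distance between me and the person I am annoying at the symphony.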

I tried explaining this to Mei last week while we were at a symphony orchestra performance.

“That crescendo that just gave you goosebumps?” I whispered. “That’s just air molecules smacking into your eardrums slightly harder.”

She elbowed me sharply in the ribs. “Jamie, I have a PhD in engineering. I know how sound works. Now please shut up and let me enjoy the performance without your existential commentary.”

The thing is, I can’t shut up about it. I’ve been conducting experiments on my perception of music ever since the realization hit me.

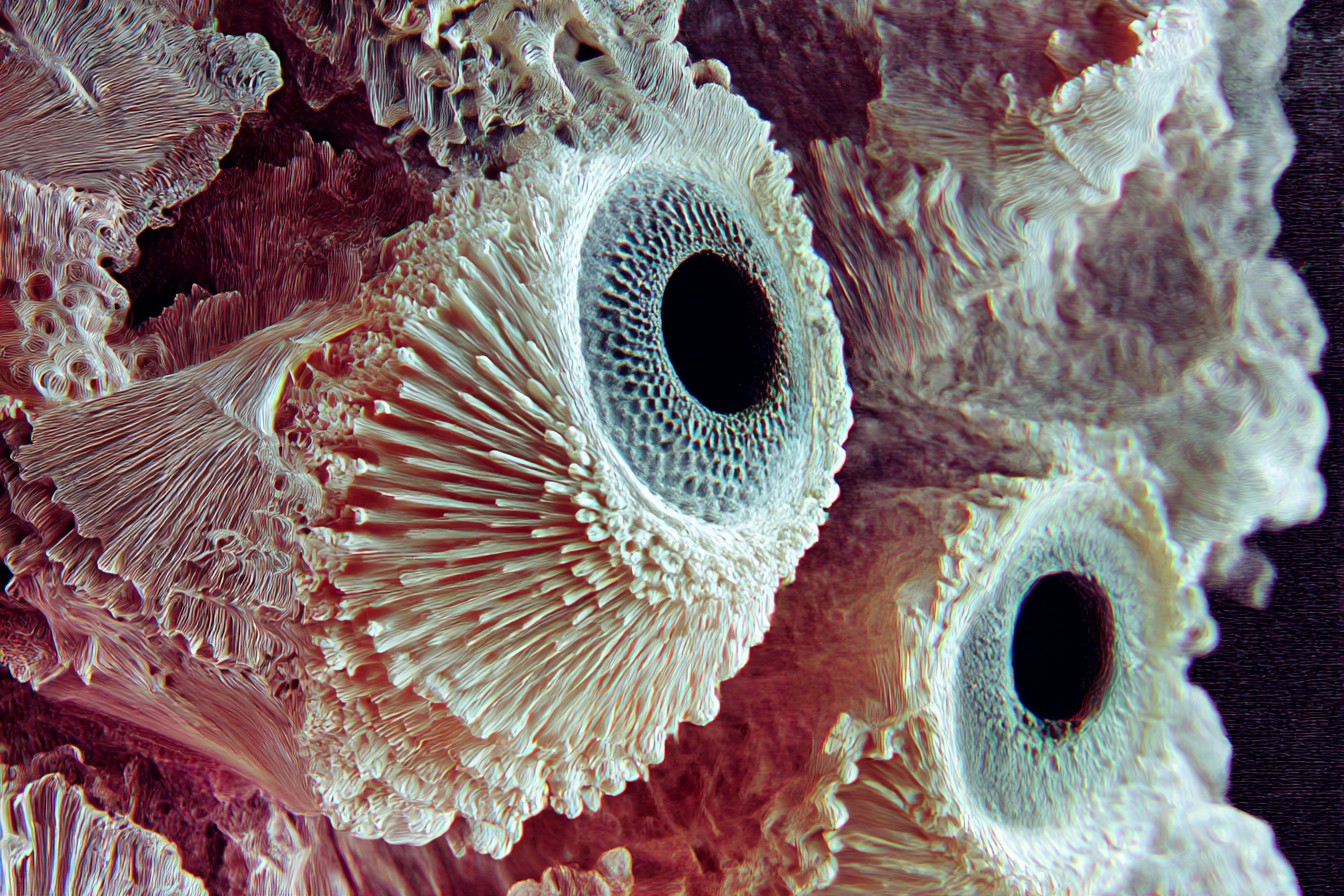

Last month, I set up what I called the “Mechanical Sound Visualization Protocol” in our living room. I placed a thin rubber membrane over a speaker, covered it with salt, and played different genres of music through it while filming the resulting patterns with a high-speed camera. The salt arranged itself into these incredible geometric shapes—Chladni patterns—that changed with different frequencies. Josh came over mid-experiment and found me on the floor, surrounded by scattered salt, staring intently at classical music made visible.

“Dude,” he said, stepping carefully around my setup, “is this about the sound thing again?”

“THE SALT IS DANCING TO BEETHOVEN!” I shouted, perhaps with more intensity than necessary. “Do you understand what this means? We’re literally seeing air getting shoved around!”

Josh sighed. “You’re going to be vacuuming salt out of your carpet for weeks.” He wasn’t wrong.

The results were fascinating, though. Each musical piece created its own unique pattern, with bass frequencies forming simple geometric shapes and higher frequencies creating increasingly complex arrangements. Heavy metal produced chaotic, asymmetric patterns that continuously evolved, while Bach generated mathematically precise formations that maintained remarkable stability. I’ve got approximately 42 gigabytes of high-definition video documenting these patterns, which I’ve watched obsessively at least seven times.

But the visualization didn’t help resolve my existential crisis. If anything, it made it worse.

Because here’s what keeps me up at night: why should vibrating air molecules trigger emotions? There’s something profoundly weird about the fact that tiny, invisible pressure variations can make me cry during the right song. The mechanical process seems insufficient to explain the subjective experience.

I decided to track my physiological responses to music while consciously reminding myself about its mechanical nature. The methodology was straightforward: I monitored my heart rate, skin conductance, and pupil dilation while listening to songs that typically provoke strong emotional responses. Half the time, I actively visualized the sound waves as nothing but air particles bouncing around. The other half, I just experienced the music normally.

The preliminary results were… uncomfortable. When I focused on the mechanical nature of sound, my physiological response decreased by approximately 32%. The music literally affected me less when I was thinking about what it actually was. This finding sent me into what Mei diplomatically called a “scientific spiral” and what my neighbor less charitably described as “that week you kept shouting about air molecules at 3 AM.”
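The arithmetic behind a figure like that is just a comparison of averages between the two listening conditions. Here is a rough sketch of the calculation; the function name `percent_decrease` is hypothetical, and the numbers are made-up placeholders for illustration, not my actual measurements.

```python
from statistics import mean


def percent_decrease(baseline, condition):
    """Percent drop of the condition's mean relative to the baseline's mean."""
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean is zero; percent change is undefined")
    return 100.0 * (base - mean(condition)) / base


if __name__ == "__main__":
    # Placeholder response scores (arbitrary units), NOT real data.
    normal_listening = [10.0, 12.0, 8.0]
    mechanical_focus = [7.0, 8.0, 6.0]
    drop = percent_decrease(normal_listening, mechanical_focus)
    print(f"response decreased by {drop:.0f}%")
```

A single percentage like this hides the spread between sessions, so it is best read as a summary rather than proof of anything.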

I extended the experiment to test whether knowledge of sound’s mechanical nature affected other people the same way. This involved cornering unsuspecting friends and explaining in excruciating detail how sound works, then measuring their enjoyment of music before and after my explanation. My sample size remains small because, as it turns out, people stop accepting your invitations when you ruin their favorite songs.

Josh was particularly affected. After my thirty-minute lecture on compression waves complete with whiteboard diagrams, he sat through Radiohead’s “Paranoid Android” with a blank expression.

“Well?” I asked, clipboard poised for data collection.

“Thanks, Jamie. This song has been my go-to for emotional regulation since college, and you’ve just turned it into air particles bumping into each other.” He didn’t come over for two weeks after that.

The most fascinating aspect of this phenomenon is how selectively it affects me. Sometimes I can completely forget the mechanical reality and get lost in a beautiful piece of music. Other times—particularly when I’m already in an analytical mood—I can’t hear anything except air getting shoved around in patterns. It’s like having two completely different listening experiences available, and my brain toggles between them unpredictably.

This duality prompted my latest experimental question: can I control which perceptual framework dominates? I developed a protocol involving different pre-listening conditions to see if I could reliably trigger either the “it’s just air” perception or the “this is profoundly moving music” experience.

The methodology involved preparing myself with different activities before listening to the same piece of music (Bach’s Cello Suite No. 1 in G Major, if you’re curious). On some days, I’d spend thirty minutes solving physics problems related to wave mechanics. On others, I’d write about personal emotional memories. Then I’d listen to Bach and rate my subjective experience on a scale I developed called the “Air-to-Transcendence Index” (ATI).

The data revealed something I hadn’t expected: my perception correlated strongly with my current mental state, but not in the way I’d hypothesized. When I was anxious or overwhelmed, the “it’s just air” framework dominated—almost as if my brain was protecting itself from additional emotional input by reducing music to its mechanical components. When I was relaxed and present, the transcendent experience was more accessible.

This realization led to an accidental breakthrough during a particularly stressful week. I was lying on our living room floor (a position Mei has learned indicates I’m having some sort of existential crisis), listening to Sigur Rós while actively visualizing air molecules bumping into each other, when suddenly something clicked.

The knowledge that sound is “just” air getting shoved around doesn’t diminish its beauty—it amplifies it. The fact that something so simple can create experiences so complex is the actual miracle. I’d been looking at it backwards.

I immediately called Josh, who picked up with noticeable hesitation.

“I’m not listening to any more lectures about wave mechanics,” he said preemptively.

“No, listen—it’s AMAZING that it’s just air getting shoved around! Don’t you see? The simplicity is what makes it incredible!” I was pacing now, probably shouting. “The gap between those air particles and Bach’s Cello Suite—that’s where the wonder is!”

There was a long pause before Josh replied. “Are you okay? This sounds like sleep deprivation talking.”

He might have been right about the sleep part. I’d been awake for approximately 31 hours at that point, fueled by caffeine and existential revelation. But the insight was genuine.

I’ve since developed a new experimental framework I’m calling the “Complexity from Simplicity Paradigm.” The basic premise is that our most profound experiences emerge from astonishingly simple physical processes. Music isn’t transcendent despite being air molecules bumping into each other—it’s transcendent because something so basic can, through patterns and organization, create experiences that seem to exist in another domain entirely.

My current experimental protocol involves exploring this paradigm across different sensory modalities. Vision is just photons hitting retinal cells. Taste is just molecules binding to receptors. Touch is just mechanical pressure on nerve endings. Yet from these simple physical interactions emerge our entire subjective reality.

The data collection is ongoing, but preliminary findings suggest that consciously holding both perspectives simultaneously—understanding the mechanical simplicity while experiencing the emergent complexity—actually enhances the subjective experience rather than diminishing it.

Last night, I took Mei to another concert, promising not to mention air molecules at all. Halfway through a particularly moving piece, she leaned over and whispered, “It’s pretty amazing that it’s just air getting shoved around, isn’t it?”

I nearly fell out of my seat. “That’s exactly what I’ve been saying!”

She smiled. “No, what you’ve been saying is that it’s JUST air getting shoved around. What I’m saying is that it’s AMAZING that it’s air getting shoved around. The emphasis matters.”

She was right, of course. The emphasis makes all the difference. And now when I listen to music, I don’t feel the existential dread anymore. Instead, I feel a doubled amazement—at both the beauty of the music itself and at the elegant simplicity of the mechanism that creates it.

The gap between those two things—that’s where the real music happens.
